A security firm recently discovered a zero-day vulnerability in Palo Alto Networks’ GlobalProtect VPN. They waited almost a year to disclose the vulnerability to the vendor and, during that time, exploited it in red-team engagements for their customers.

There were clearly some passionate people arguing both for and against the practice. Vulnerability disclosure is basically like Victorian rules of etiquette where, whatever you do, you are bound to make someone mad. I am going to try to avoid making a value judgement on whether the decisions in this specific case were right or wrong. Instead, I would like to recap the conversation, quantify the risk to the larger community of holding onto zero-days and not disclosing them, and hopefully advance the conversation.

The main argument for their action (that I found) is based on the premise that:

- Vulnerability management practices are failing in and for organizations. Therefore,

- Using zero-days in a simulated attack allows exercising controls beyond vulnerability remediation and tests the customer’s ability to detect and/or respond to these attacks in real-world scenarios.

- Finally, leveraging zero-days in offensive security gives the simulated attackers access that may be otherwise unavailable.

- Bonus point: whoever discovers the vulnerability owns it, and can choose to do whatever they want with it, so back off.

I’ll walk through each of these…

- I agree there are challenges with vulnerability management (VM), and I have studied it quite a bit. But that doesn’t justify dismissing or diminishing the practice (search for “vulnerability management hamster wheel”). “Defense in depth” has been around forever, and I’m fairly confident anyone who is breached because of a known vulnerability is ridiculed heavily. But my point is that it is not an either/or; we as an industry can focus on both detection and prevention. Plus, why are zero-days even needed if vulnerability management is failing? Shouldn’t there be plenty of entry points without resorting to zero-days?

- From what I understand, using zero-days is not the only method to do this testing. It may not be a perfect replacement, but it is possible to work with the client and arrange simulated access. I think Lesley Carhart and Wendy Nather captured these first two points best:

- One of the details in this case is that the level of access granted by the exploit was different than what the client could have granted even if they wanted to:

But Tavis Ormandy had thoughts on that (in a different twitter thread):

- I am still struggling with the point that the researcher “owns” the vulnerability and can do with it what they want, and I don’t have any direct counter to this. I’d like to understand this point a bit more. I think my objection is on an ethical level, which is, of course, subjective. But I wonder if some analogies may work here. I mean, if a person owns a gun, they can’t just do what they want with it, right? (Was that analogy too… “loaded”?) Maybe it’s more like discovering a flaw in a children’s toy that may cause harm. Or maybe it’s more like a researcher discovering a new strain of a virus (ya know, purely hypothetical) and just sitting on it, or somehow profiting off of it at the probable expense of others. These analogies are clearly imperfect, but I would hope that if someone found a flaw that could harm the larger community, they would act responsibly and ethically. But again, I am not well versed in the intricacies and history of this particular perspective.

Benefit versus Cost

The section above looked at the benefits of working with zero-days, and even though I offered some counterpoints, it’s clear that offensive security practitioners, and probably their clients, benefit from zero-day research and its use in red team engagements. But at what cost? What are the arguments against doing the research to discover a zero-day, then holding on to it for some duration and even using it?

I started pulling together various points to answer that question, but I’ve arrived at a single argument: co-discovery. This is the belief that every vulnerability can be discovered by more than one person, so when someone discovers a vulnerability, there is some non-zero chance that it will be independently discovered by another researcher.

What is the chance of co-discovery?

I’ll be honest here, the data isn’t all that great around this question and we’ll have to do a little “what if” math. But my conclusion is this: if this practice becomes widespread and every (or even most) pentest or red team consulting company develops and holds on to even a few zero-days each, then it’s near-certain some of those will be used by malicious actors in real-world attacks. Which means any vulnerability equities process should probably focus more on the impact side than the likelihood of co-discovery.

We do have some data on co-discovery though. First, Bruce Schneier has a post from 2016 that points out that Heartbleed was independently discovered by both Codenomicon and Google. The one thing to take away from that brief article is that the probability of co-discovery is non-zero. It’s also worth noting that co-discovery is not just a vulnerability thing.

We have another data source from a post in 2017 and data from a set of eight different CTF events from 2013-2016. And if I understood the data correctly, there were 228 vulnerabilities across all events, of which 75% (171) were discovered by more than one competing team. But these events varied greatly in size and complexity. It would be difficult to use this data as is, and it seems like focusing multiple teams on a single target may inflate the rate of co-discovery. Therefore, I don’t think 75% is likely to represent the rate of co-discovery in the real world, but it’s still an interesting reference point.

Luckily, we have a study that I think we can leverage thanks to Lilian Ablon and Andy Bogart and their paper titled, “Zero Days, Thousands of Nights: The Life and Times of Zero-Day Vulnerabilities and Their Exploits” in which they shared two relevant statistics about co-discovery (which the authors call “overlap” and “collision”).

“We found a median value of 5.76 percent overlap … given a 365-day time interval, and a median value of 0.87 percent overlap … given a 90-day time interval.” and they make the conclusion, “…our data show a relatively low collision rate.” But is it low? Let’s run some scenarios based on that rate given that many individual private companies may be generating and storing zero-day exploits.

Time for some math…

We are going to make some assumptions here and yes, there are all sorts of qualifications and exceptions to these estimations. Not all vulns are the same: some will be way easier to find, some require more expertise, some products are more popular, and so on. But let’s just assume there is some number of (offensive security) consulting companies and they vary around an average number of zero-day vulnerabilities that they hold on to. Whatever single set of numbers we pick, we will absolutely be wrong, but we may be able to establish some reference points. For example, if we start with gross overestimations and there still isn’t a problem, that may support the practice. Conversely, if we vastly underestimate and it’s still bad, we should reconsider.

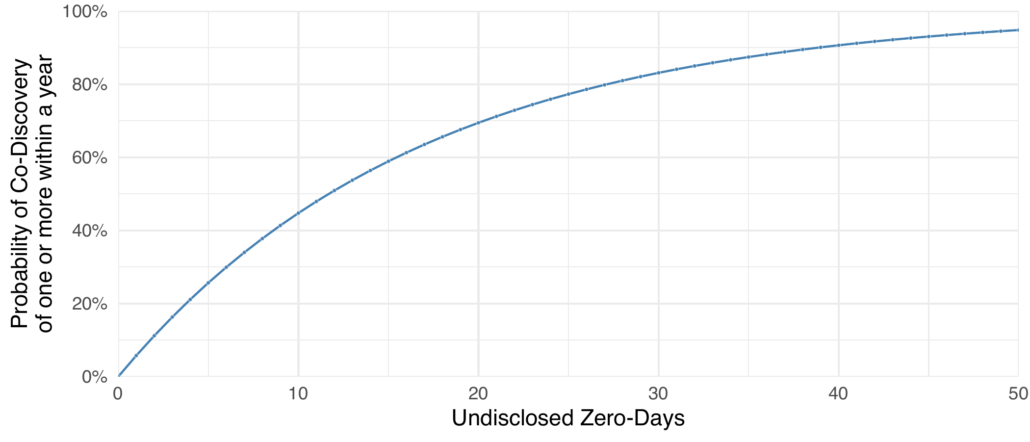

I am going to start with a gross underestimation (because I know where this is going) and assume there are only 10 red teaming companies in the world, and each keeps a stockpile of five (5) zero-days. Sure, there may be hundreds if not thousands of consulting companies sitting on undisclosed vulnerabilities, but let’s see where this leads us. What would that mean to the larger community? Well, that’s 50 zero-days, each with a 5.7% chance of co-discovery in the first year (remember, in the story at the beginning the firm held their zero-day for almost a year). That puts the chance of co-discovery of one or more of those vulnerabilities at about 95%! In other words, there is a very high chance at least one of those 10 companies is holding onto a zero-day that malicious actors may be exploiting in the wild. We can also look at other values: there is an 80% chance that two or more are being exploited and 55% that three or more are being exploited.
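The numbers above can be checked with a short binomial calculation. This is only a sketch: it assumes each of the 50 zero-days is co-discovered independently with the same 5.7% first-year probability, and the names `prob_at_least`, `firms`, and `zero_days_per_firm` are made up for illustration.

```python
from math import comb


def prob_at_least(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p): at least k of n zero-days co-discovered."""
    # Sum the probabilities of seeing fewer than k co-discoveries, then take the complement.
    below = sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k))
    return 1.0 - below


firms = 10               # assumed number of red teaming companies
zero_days_per_firm = 5   # assumed stockpile per company
p_year = 0.057           # first-year co-discovery rate used in this post

n = firms * zero_days_per_firm
for k in (1, 2, 3):
    print(f"P(at least {k} co-discovered) = {prob_at_least(k, n, p_year):.1%}")
```

Under those assumptions the three results land close to the rounded figures quoted above (roughly 95%, 79%, and 55%).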

Let’s think about that for a second: with only 10 companies holding on to 5 zero-days each, there is a better than even chance that three or more of the 50 zero-days are co-discovered and may be used by malicious actors in the wild. Is that acceptable? In fact, it only takes 12 zero-days before there is a better than even chance at least one of them will be co-discovered and possibly exploited in the wild. The following chart shows the relationship between the number of zero-day vulnerabilities and the probability of co-discovery in the first year (according to the 5.7% from Ablon and Bogart).

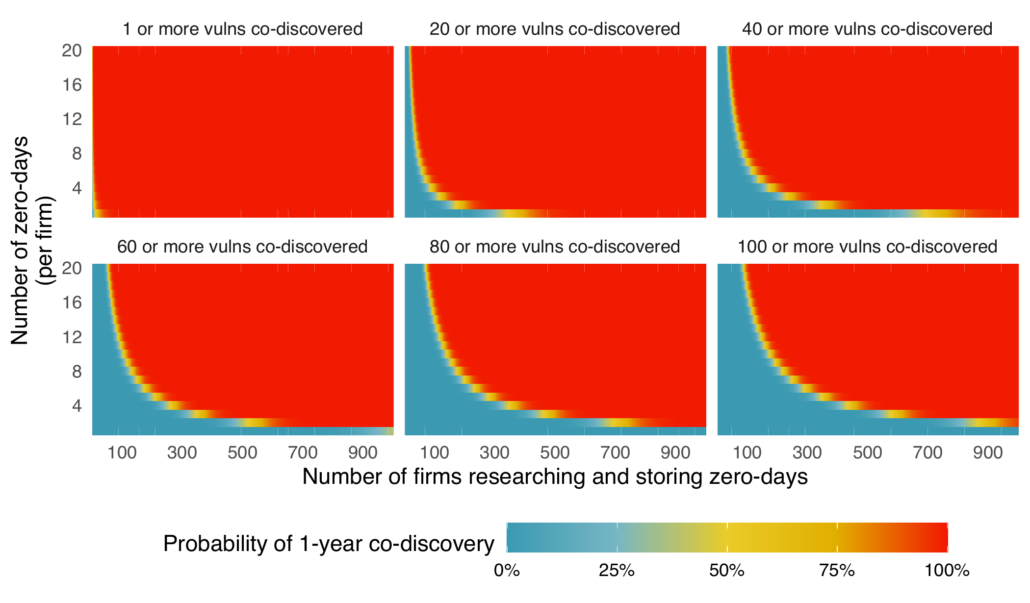
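For anyone who wants to see where the 12 comes from, here is a short derivation. It assumes each zero-day is co-discovered independently with the same probability $p$, which is a simplification; $n$ is the number of stored zero-days.

```latex
\begin{align*}
P(\text{at least one co-discovered}) &= 1 - (1 - p)^n \\
1 - (1 - p)^n > \tfrac{1}{2}
  \;&\Longleftrightarrow\; n > \frac{\ln 2}{-\ln(1 - p)} \\
p = 0.057:\quad n &> \frac{0.693}{0.0587} \approx 11.8
  \;\Longrightarrow\; n = 12
\end{align*}
```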

It won’t take much before someone is holding onto a zero-day actively being exploited in the wild… But what about a more realistic estimate of firms and zero-days? And we can even get fancy and estimate the average is somewhere between 1 and 20 stored zero-days and the number of companies could be up to 1,000. What do the probabilities look like? The next figure attempts to build our intuition around the relationship between the number of firms, number of zero-days at each firm, and then the probability of exceeding some number of vulnerabilities being co-discovered.

The way to read the chart is that each panel shows the probability of some minimum number of zero-days being co-discovered. For example, if we are curious about the probability of co-discovering 40 or more zero-days, we’d look at the upper right plot, find 300 on the horizontal axis (if we want to assume that 300 private companies are storing zero-days), and then scan up to about the third row (assuming they store an average of 3 zero-days). There we’d see a high probability that 40 or more of those zero-days would be co-discovered (96.5% chance to be exact).

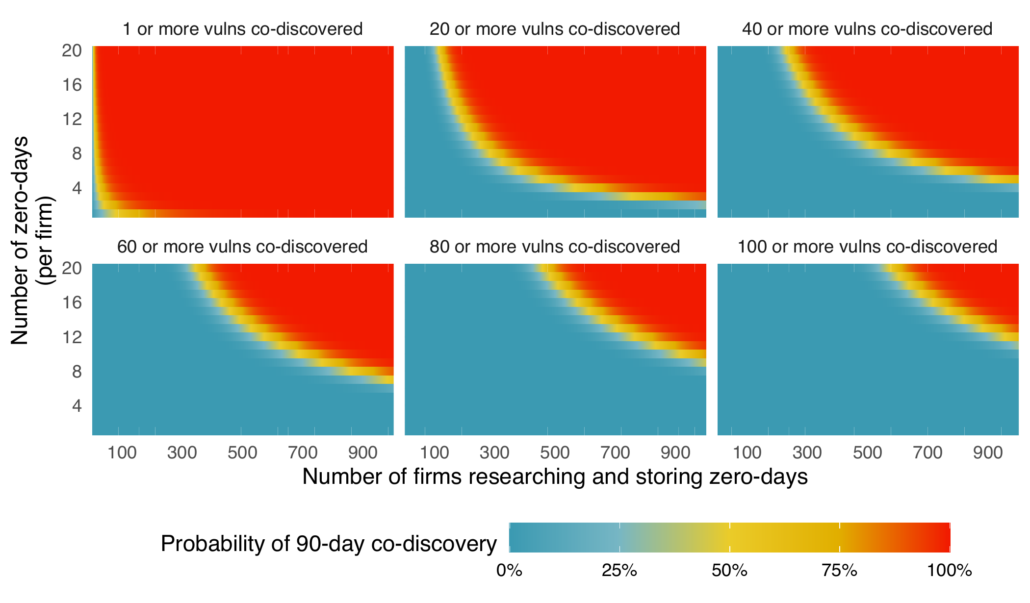
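A rough version of that grid can be reproduced in a few lines. This is a sketch under simplifying assumptions, not the method used to draw the chart: it treats the total number of zero-days as exactly firms × average per firm and uses a plain binomial model, so its values will only approximate the plotted ones. The function names and the example grid values are hypothetical.

```python
from math import exp, lgamma, log, log1p


def binom_pmf(k: int, n: int, p: float) -> float:
    """Binomial(n, p) probability of exactly k hits, computed in log space
    so that large n (thousands of zero-days) does not overflow.
    Requires 0 < p < 1 and 0 <= k <= n."""
    log_pmf = (
        lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
        + k * log(p) + (n - k) * log1p(-p)
    )
    return exp(log_pmf)


def prob_min_codiscovered(min_hits: int, firms: int, avg_per_firm: int, p: float) -> float:
    """P(at least min_hits of the stored zero-days are co-discovered)."""
    n = firms * avg_per_firm  # simplification: every firm holds exactly the average
    if min_hits > n:
        return 0.0  # cannot co-discover more zero-days than exist
    below = sum(binom_pmf(k, n, p) for k in range(min_hits))
    return max(0.0, 1.0 - below)


p_year = 0.057  # first-year co-discovery rate used in this post
for firms in (10, 100, 300, 1000):
    row = [prob_min_codiscovered(40, firms, avg, p_year) for avg in (1, 3, 10, 20)]
    print(f"{firms:>5} firms:", "  ".join(f"{x:6.1%}" for x in row))
```

Each printed row is one firm count and each column one assumed average stockpile, for the "40 or more" panel only; changing the first argument gives the other panels.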

There is one more thing I want to explore… What if the estimate of 5.7% is too high? Or 1 year is too long? Let’s do this again, but with the 90-day estimate from Ablon and Bogart’s zero-day research. At 0.87% chance of co-discovery, surely at that clearly “low” rate, we couldn’t see much overlap, could we? The next plot answers that in the same way as the previous plot.

It is much different with the lower probability, but again, it takes just 80 zero-days at 0.87% chance before we have better than an even chance at least one will be co-discovered. That is about 40 private firms globally holding on to just 2 zero-days each for 90 days. But stop and think to yourself, how many private companies (and independent researchers) are researching vulnerabilities and how long are they holding on to them before disclosing?
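The 80 zero-day threshold is easy to check. The sketch below assumes independent co-discovery at a flat 0.87% per zero-day over 90 days; `zero_days_for_even_odds` and `prob_any` are illustrative names, not anything from the Ablon and Bogart paper.

```python
from math import ceil, log


def zero_days_for_even_odds(p: float) -> int:
    """Smallest n with 1 - (1 - p)**n > 0.5, i.e. better-than-even odds
    that at least one of n zero-days is co-discovered."""
    return ceil(log(0.5) / log(1.0 - p))


def prob_any(n: int, p: float) -> float:
    """Probability that at least one of n zero-days is co-discovered."""
    return 1.0 - (1.0 - p) ** n


p_90_days = 0.0087  # 90-day co-discovery rate used in this post
n = zero_days_for_even_odds(p_90_days)
print(f"{n} zero-days -> {prob_any(n, p_90_days):.1%} chance of at least one co-discovery")

# 40 firms holding 2 zero-days each is the same 80 zero-days.
print(f"40 firms x 2 -> {prob_any(40 * 2, p_90_days):.1%}")
```

The same function gives 12 for the one-year rate of 5.7%, which matches the earlier figure.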

Wrapping up

We do not need to be overly concerned about any single firm researching and storing zero-days. But we should be very concerned if this is (or becomes) a global, wide-spread practice. If every (or even most) pentest or red team consulting company developed and held on to even a few zero-days each, then it’s near-certain some of those will be co-discovered by others and potentially (probably?) used by malicious actors in real world attacks. Which means any vulnerability equities process should probably focus more on the impact side than the probability of co-discovery, because even a low probability like 0.87% co-discovery rate isn’t very low at a large (internet) scale of zero-days being researched and stored. So if you find, ya know, hypothetically speaking, an RCE in a popular internet-facing VPN, please consider immediate responsible and coordinated disclosure and don’t make it common practice across the industry to sit on it for almost a year.
